Setting up OpenTelemetry to send metrics and traces to Grafana Cloud

Observability is essential for understanding how your system actually behaves and for ensuring reliable performance and a solid user experience. As systems grow and evolve, you need consistent, vendor-neutral observability tooling - especially when you want to avoid getting locked into a single platform provider. Cost becomes a real concern as your usage scales: what seemed like an affordable package with low user volumes can quickly become expensive as usage ramps up.

The OpenTelemetry (OTel) project provides a standard set of protocols and tools for collecting telemetry data - metrics, logs and traces - and shipping it to whatever observability platform you choose: Grafana Labs, Elastic, Datadog, New Relic, you name it. You collect telemetry consistently across your platform and swap out exporters when your environment or providers change.

In this post, I'll walk through a straightforward Node.js setup using zero-code auto instrumentation - just environment variables, no code changes needed. We'll pipe the collected data to Grafana Cloud via Alloy, Grafana's OpenTelemetry-compatible collector.

Create a basic API

Let's set up a basic Node.js API to work with. Make sure you have Node.js installed locally, then initialise a project.

mkdir basic-api
cd basic-api
npm init -y

Create the index.js file, which gives us a basic JSON-over-HTTP API.

// index.js
const http = require("http");

const server = http.createServer((request, response) => {
  response.writeHead(200, { "Content-Type": "application/json" });
  response.end(JSON.stringify({ message: "hello" }));
});

server.listen(3000, () => {
  console.log(`Listening on port 3000`);
});

Start up the API locally.

node index.js

And test the API, e.g.

❯ curl http://localhost:3000/
{"message":"hello"}

Perfect - now we have a basic API that we can instrument with OpenTelemetry to start gathering metrics and traces.

OTel auto instrumentation

Following the OTel auto instrumentation docs, we can inject instrumentation using just environment variables - no code changes required.

First, install the OTel packages we need:

npm install --save @opentelemetry/api
npm install --save @opentelemetry/auto-instrumentations-node

Now we'll set environment variables to configure how we want to instrument this API. Let's start with trace telemetry going to the console using the console exporter - this gives us a quick look at the data being sent. We inject the auto instrumentation via a require argument on the node process.

The instrumentation needs to happen before modules load (before we call require('http')), which is why we add it as a node process argument.
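To see why load order matters, here is a toy illustration in plain JavaScript. It is not how the OTel packages are implemented; toyHttp, instrument and recorded are hypothetical names invented for this sketch. It only shows the general idea that a wrapper applied after application code has already captured a function reference never sees those calls.

```javascript
// Toy stand-in for a library module (hypothetical, not a real package).
const toyHttp = {
  handle(path) {
    return `handled ${path}`;
  },
};

// Names of the "spans" our toy instrumentation records.
const recorded = [];

// Toy instrumentation: replace the function with a wrapper that records the call.
function instrument(mod) {
  const original = mod.handle;
  mod.handle = function (path) {
    recorded.push(`span:${path}`);
    return original.call(this, path);
  };
}

// Application code that grabbed a reference BEFORE instrumentation ran.
const earlyHandle = toyHttp.handle;

instrument(toyHttp);

// Application code that looks the function up AFTER instrumentation ran.
const lateHandle = toyHttp.handle;

earlyHandle("/early"); // bypasses the wrapper
lateHandle("/late"); // goes through the wrapper

console.log(recorded); // [ 'span:/late' ]
```

Only the call made through the late reference is recorded, which is the situation the --require argument avoids by loading the instrumentation first.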

Start up the API again with the appropriate environment variables set along with this "require" argument.

OTEL_TRACES_EXPORTER="console" \
OTEL_SERVICE_NAME="basic-api" \
node --require @opentelemetry/auto-instrumentations-node/register index.js

When we hit the API again, we should start seeing trace information logged to the console of the API service, e.g.

{
  "resource": {
    "attributes": {
      "service.name": "basic-api",
      "telemetry.sdk.language": "nodejs",
      "telemetry.sdk.name": "opentelemetry",
      "telemetry.sdk.version": "1.25.1"
    }
  },
  "traceId": "50816433d8d5b682f253b4f45bd846e2",
  "parentId": undefined,
  "traceState": undefined,
  "name": "GET",
  "id": "80566118c92a9907",
  "kind": 1,
  "timestamp": 1724317279294000,
  "duration": 3881.291,
  "attributes": {
    "http.url": "http://localhost:3000/",
    "http.host": "localhost:3000",
    "net.host.name": "localhost",
    "http.method": "GET",
    "http.scheme": "http",
    "http.target": "/",
    "http.user_agent": "curl/8.7.1",
    "http.flavor": "1.1",
    "net.transport": "ip_tcp",
    "net.host.ip": "::1",
    "net.host.port": 3000,
    "net.peer.ip": "::1",
    "net.peer.port": 54233,
    "http.status_code": 200,
    "http.status_text": "OK"
  },
  "status": { "code": 0 },
  "events": [],
  "links": []
}

This is the data we'll pipe through to Grafana Cloud for visualization in the next sections.
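A few of the numeric fields in that output are easier to read once decoded. The sketch below assumes the console exporter reports timestamp and duration in microseconds, and that kind and status.code follow the SpanKind and SpanStatusCode enums in @opentelemetry/api. The lookup tables and the span object are hand-copied for illustration rather than imported from the SDK.

```javascript
// Values copied from the example span above.
const span = {
  kind: 1,
  timestamp: 1724317279294000, // microseconds since the Unix epoch (assumed unit)
  duration: 3881.291, // microseconds (assumed unit)
  status: { code: 0 },
};

// Index positions mirror the SpanKind and SpanStatusCode enums in @opentelemetry/api.
const SPAN_KINDS = ["INTERNAL", "SERVER", "CLIENT", "PRODUCER", "CONSUMER"];
const STATUS_CODES = ["UNSET", "OK", "ERROR"];

const summary = {
  kind: SPAN_KINDS[span.kind],
  status: STATUS_CODES[span.status.code],
  // Date expects milliseconds, so divide the microsecond timestamp by 1000.
  startedAt: new Date(span.timestamp / 1000).toISOString(),
  durationMs: (span.duration / 1000).toFixed(3),
};

console.log(summary);
```

Under those assumptions the example is a server span that started at 2024-08-22T09:01:19.294Z, took roughly 3.9 ms, and has an unset status, which is what you get when nothing marks the span as OK or as an error.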

Create a Grafana Cloud account

To actually use this telemetry, we need to get it somewhere we can visualize and make sense of it. An OTel collector handles the data collection and exports to whatever tooling you choose. OpenTelemetry provides a Collector implementation which works great in Kubernetes clusters with the OpenTelemetry Operator. But for now let's use Grafana Alloy, Grafana's collector implementation. The collector UI makes it easier to understand data flow initially, and Alloy pipes directly into Grafana Cloud. It's a solid starting point even if you switch to alternatives later.

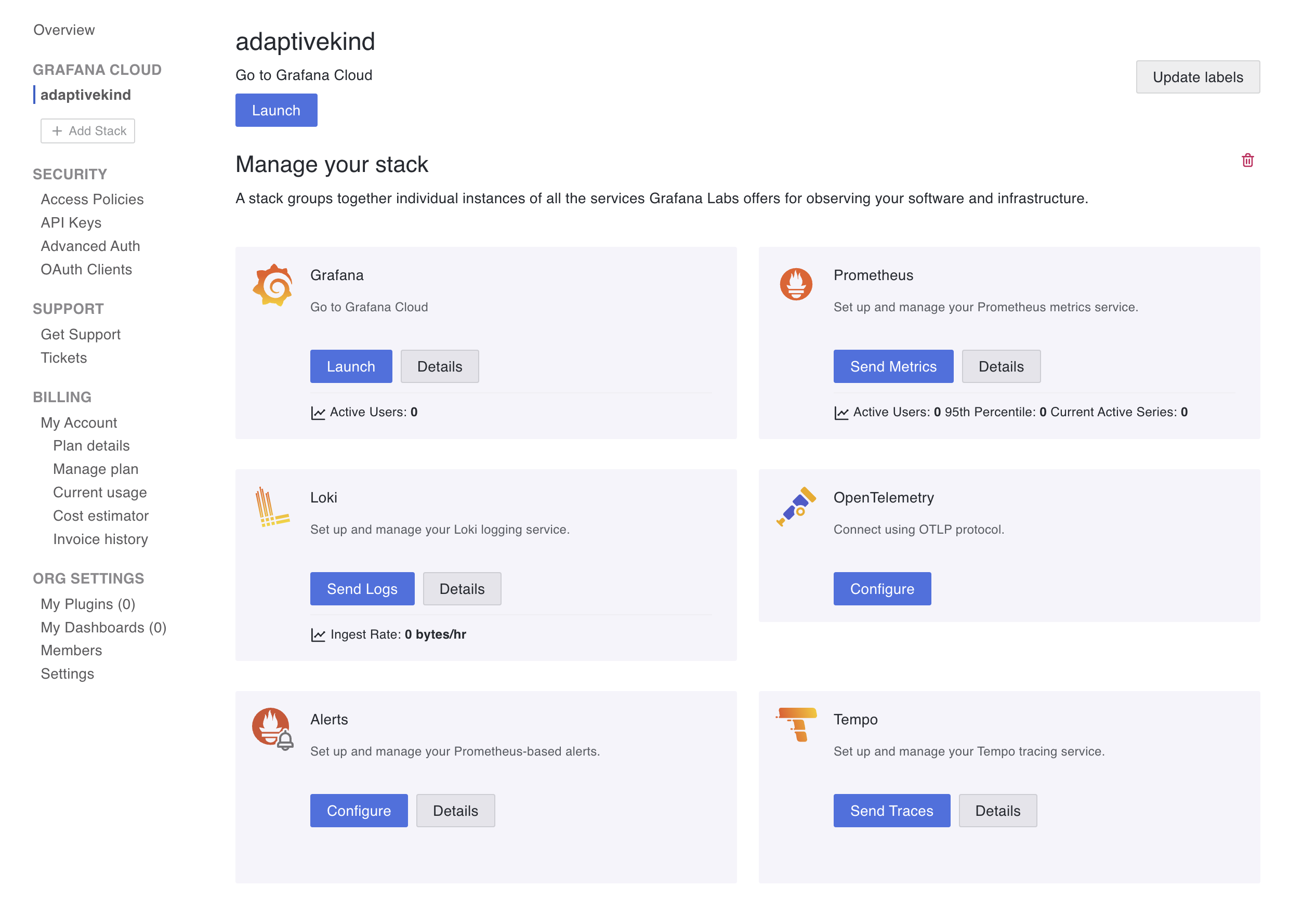

Sign up for a free Grafana Cloud account. Once you have an account set up, add a new stack. You should now see a dashboard for the stack.

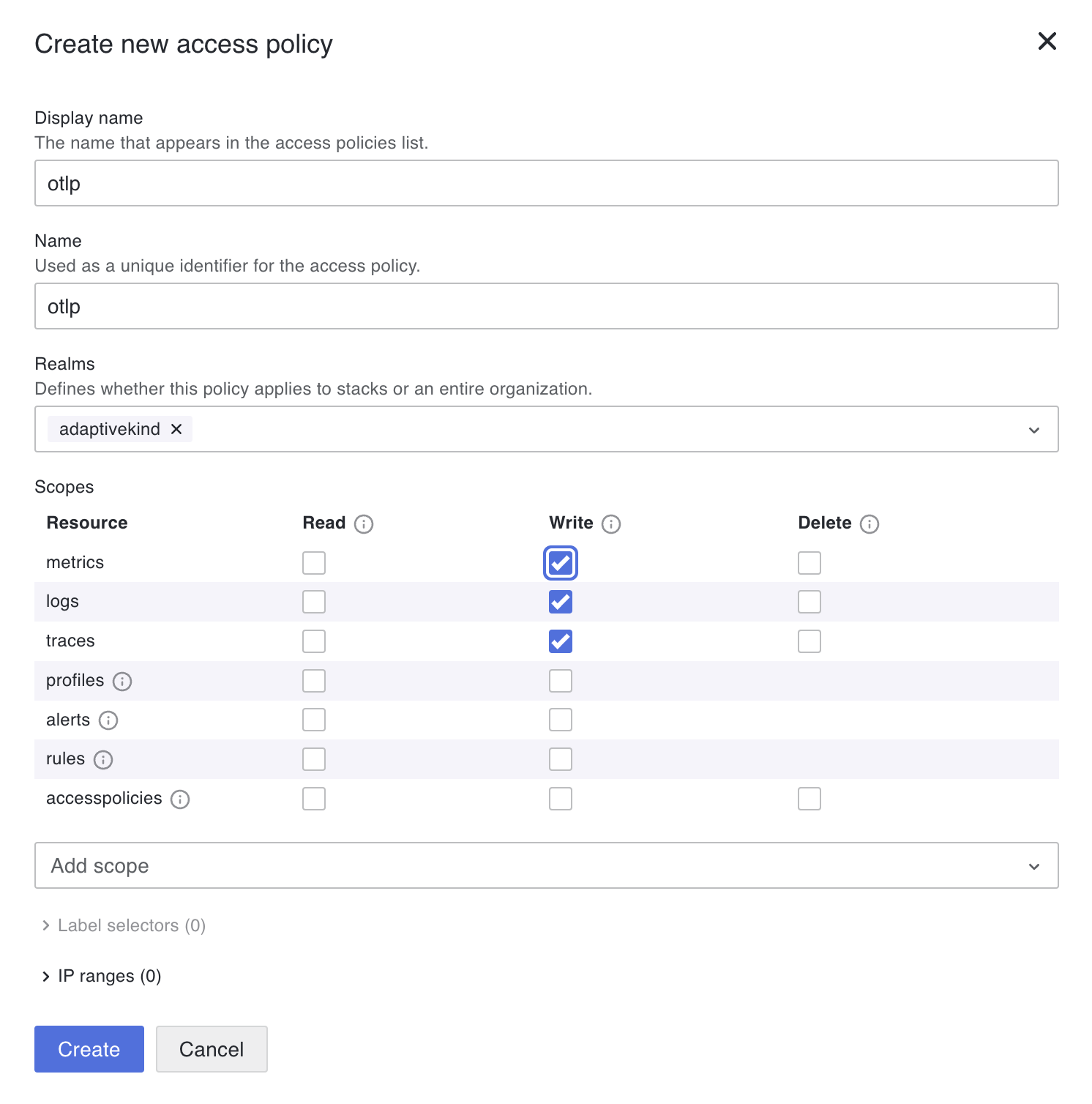

Create an access policy which we'll use for OTLP access by clicking on security -> access policies. Select your new stack name in the Realm field. Then select write for metrics, logs, and traces under scopes.

Add a token for this access policy and stash the token somewhere safe. You'll need it in the next steps, and once you leave this screen, you can't retrieve it again from the Grafana portal.

Go back to the stack dashboard in the Grafana portal and click on Configure on the OpenTelemetry tile. Note down the Instance ID and the URL for the OTLP endpoint. You'll also need these in the steps below.

Set up the Alloy OTel collector

Create an Alloy configuration file locally.

// config.alloy
logging {
  level  = "info"
  format = "logfmt"
}

// See live data going through the pipe in the Alloy UI
livedebugging {
  enabled = true
}

otelcol.receiver.otlp "basic" {
  http { }

  output {
    metrics = [otelcol.processor.batch.basic.input]
    logs    = [otelcol.processor.batch.basic.input]
    traces  = [otelcol.processor.batch.basic.input]
  }
}

otelcol.processor.batch "basic" {
  output {
    metrics = [otelcol.exporter.otlphttp.default.input]
    logs    = [otelcol.exporter.otlphttp.default.input]
    traces  = [otelcol.exporter.otlphttp.default.input]
  }
}

otelcol.auth.basic "default" {
  username = "<YOUR_GRAFANA_CLOUD_INSTANCE_ID>"
  password = "<ACCESS_POLICY_TOKEN>"
}

otelcol.exporter.otlphttp "default" {
  client {
    endpoint = "<OTLP_ENDPOINT_URL>"
    auth     = otelcol.auth.basic.default.handler
  }
}

Replace the placeholders with the Instance ID and OTLP endpoint URL from the OpenTelemetry configuration page, and the access policy token you created in the previous steps.
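For reference, basic authentication boils down to a single Authorization header built from those two values. The sketch below shows the standard HTTP Basic scheme in plain Node.js; buildBasicAuthHeader is a hypothetical helper and the credentials are made-up placeholders. I'm assuming this matches what the otelcol.auth.basic component sends on your behalf, which can be handy to know if you ever need to debug an authentication failure against the endpoint by hand.

```javascript
// Hypothetical helper: HTTP Basic auth is "Basic " + base64("username:password").
function buildBasicAuthHeader(instanceId, token) {
  const encoded = Buffer.from(`${instanceId}:${token}`, "utf8").toString("base64");
  return `Basic ${encoded}`;
}

// Placeholder values, not real credentials.
const header = buildBasicAuthHeader("123456", "example-token");
console.log(header);

// Decoding the header again recovers the original pair.
const decoded = Buffer.from(header.slice("Basic ".length), "base64").toString("utf8");
console.log(decoded); // 123456:example-token
```

Note that base64 is an encoding, not encryption, so treat the resulting header with the same care as the token itself.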

Start up Alloy in Docker. I've set the argument --stability.level=experimental so that we can use the livedebugging option that has been set in the configuration file above. You'll see why that is useful shortly.

docker run \
  -v ./config.alloy:/etc/alloy/config.alloy \
  -p 12345:12345 \
  -p 4318:4318 \
  grafana/alloy:latest \
  run --server.http.listen-addr=0.0.0.0:12345 --storage.path=/var/lib/alloy/data \
  --stability.level=experimental \
  /etc/alloy/config.alloy

Update OTel options to export to Alloy

We can change the OTEL_TRACES_EXPORTER environment variable to otlp to export the traces via OTLP (OpenTelemetry Protocol). By default this sends to port 4318 on localhost, which is served by the Alloy Docker container we started above. We'll also add resource detectors to annotate the trace telemetry with host metadata, so it is easier to see the source of the telemetry when we come to visualise the data.
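As a rough sketch of where that default comes from: the OTel specification defines the OTEL_EXPORTER_OTLP_TRACES_ENDPOINT and OTEL_EXPORTER_OTLP_ENDPOINT variables, with http://localhost:4318 as the fallback for OTLP over HTTP. The function below is a simplified model of that lookup, not the SDK's own code. resolveTracesEndpoint is a hypothetical name and alloy.internal is a made-up host.

```javascript
// Simplified model of how an OTLP/HTTP trace endpoint is chosen (assumption, not SDK code).
function resolveTracesEndpoint(env = process.env) {
  // A signal-specific endpoint is used exactly as given.
  if (env.OTEL_EXPORTER_OTLP_TRACES_ENDPOINT) {
    return env.OTEL_EXPORTER_OTLP_TRACES_ENDPOINT;
  }
  // Otherwise the general endpoint (or the default) gets the traces path appended.
  const base = env.OTEL_EXPORTER_OTLP_ENDPOINT || "http://localhost:4318";
  return `${base.replace(/\/+$/, "")}/v1/traces`;
}

console.log(resolveTracesEndpoint({}));
// http://localhost:4318/v1/traces

console.log(resolveTracesEndpoint({ OTEL_EXPORTER_OTLP_ENDPOINT: "http://alloy.internal:4318/" }));
// http://alloy.internal:4318/v1/traces
```

With nothing set, traces go to the Alloy receiver listening on port 4318, which is why no endpoint variable appears in the command that follows.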

OTEL_TRACES_EXPORTER="otlp" \
OTEL_SERVICE_NAME="basic-api" \
OTEL_NODE_RESOURCE_DETECTORS="env,host,os" \
node --require @opentelemetry/auto-instrumentations-node/register index.js

This is a good time to access the local Alloy UI at http://localhost:12345/.

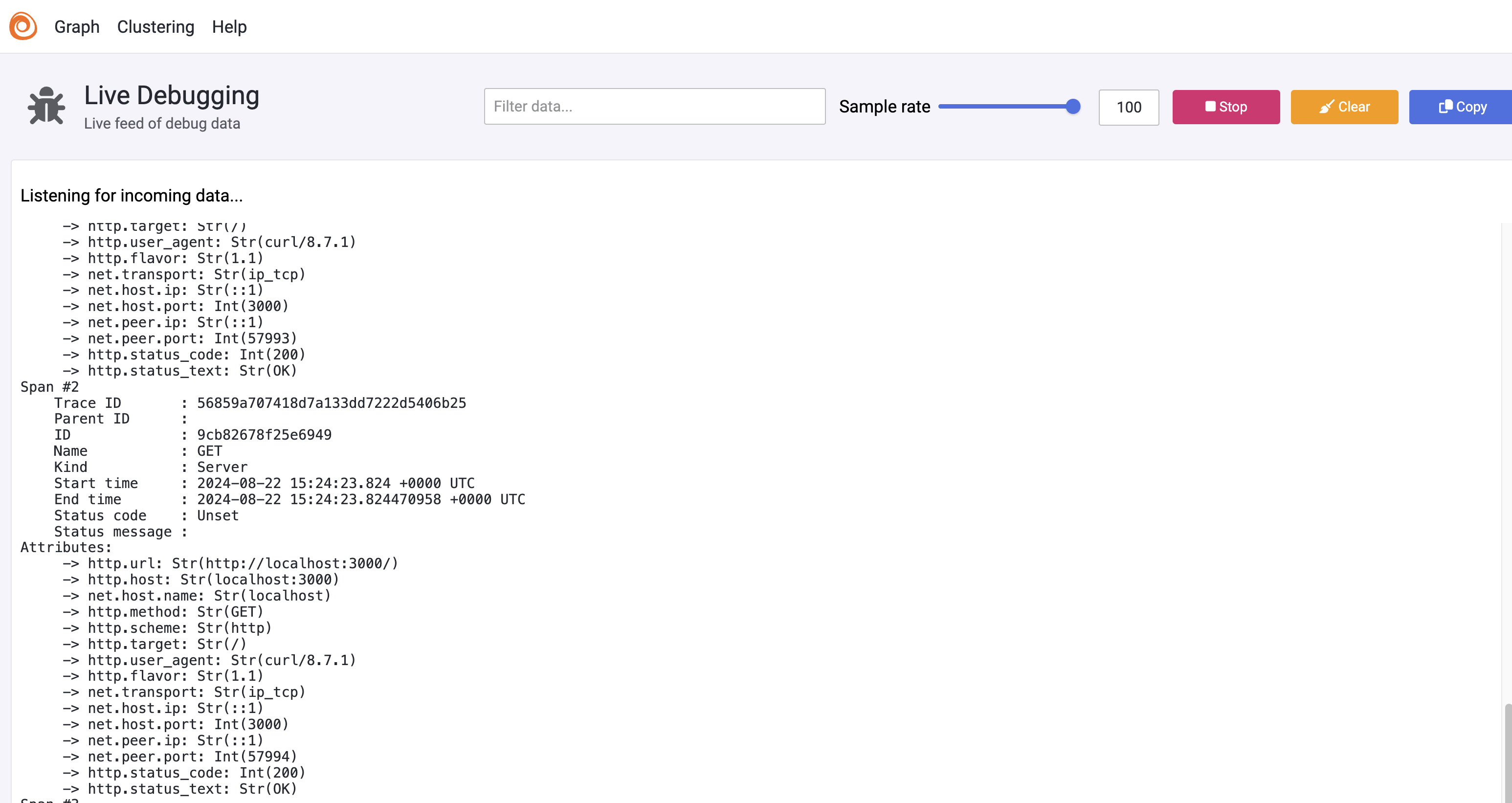

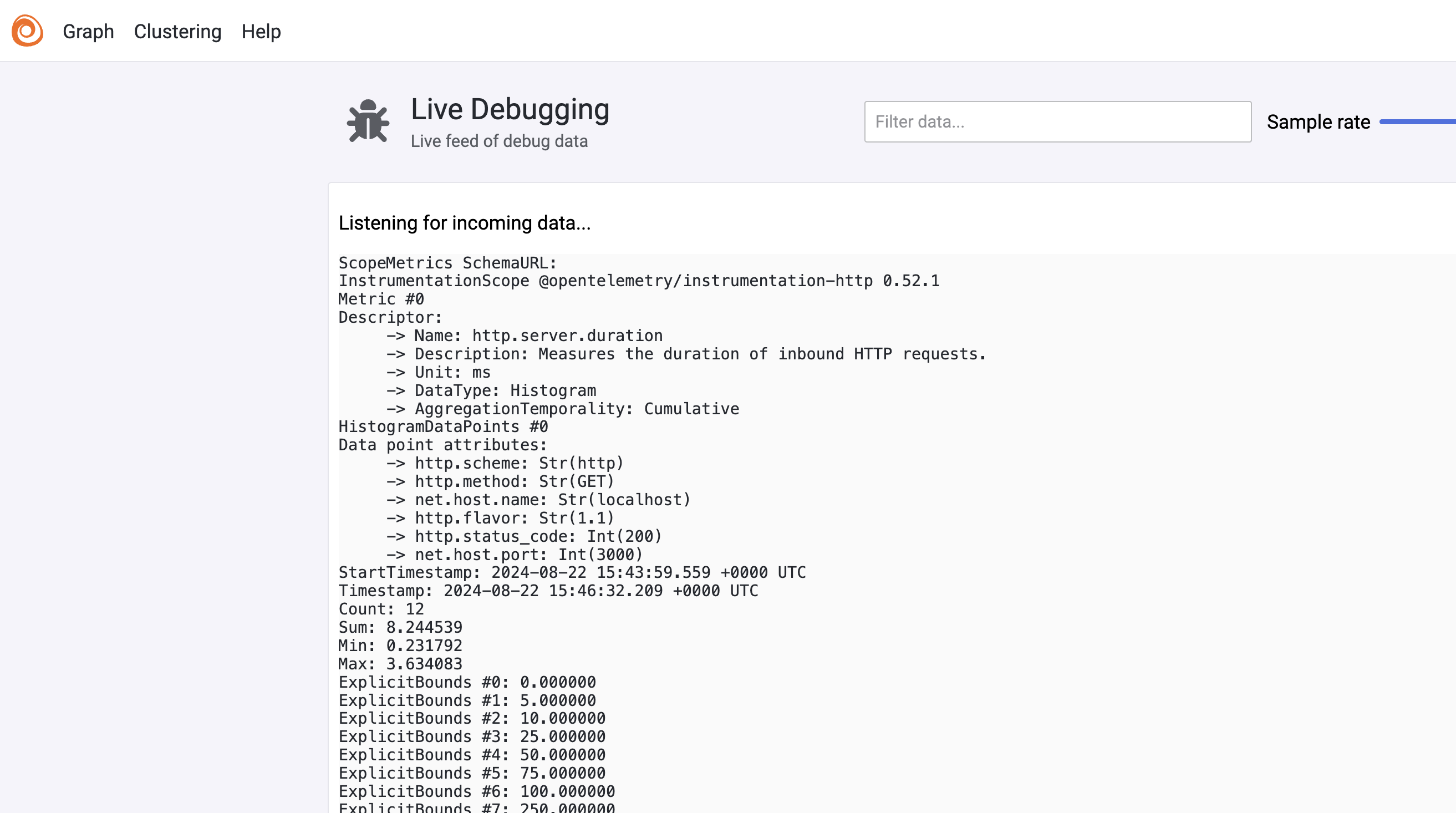

Click on Graph in the menu bar, select the tile otelcol.receiver.otlp.basic and click on Live debugging. Now access the API with curl http://localhost:3000 a few times to give us some data. You should see data coming down the pipe and, provided there are no errors in the Alloy logs, the trace information should be on its way to Grafana Cloud. If you do see errors, check the credentials and endpoint URL you set in config.alloy above.
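If you would rather generate that traffic from Node.js than repeat curl by hand, something like the following would do. It is a minimal sketch assuming Node.js 18 or later (for the built-in fetch) and the API from earlier listening on port 3000; hitApi is a hypothetical helper name.

```javascript
// Send `count` sequential GET requests and tally the outcomes.
// Requires Node.js 18+ for the built-in fetch and AbortSignal.timeout.
async function hitApi(baseUrl, count) {
  const result = { ok: 0, failed: 0 };
  for (let i = 0; i < count; i++) {
    try {
      const response = await fetch(baseUrl, { signal: AbortSignal.timeout(2000) });
      await response.text(); // read the body so the request fully completes
      if (response.ok) {
        result.ok++;
      } else {
        result.failed++;
      }
    } catch {
      // Connection refused, timeout, etc. Count it rather than crash.
      result.failed++;
    }
  }
  return result;
}

hitApi("http://localhost:3000/", 10).then((result) => console.log(result));
```

Run it from a separate terminal while the instrumented API is up; each successful request should show up as a trace in the live debugging view.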

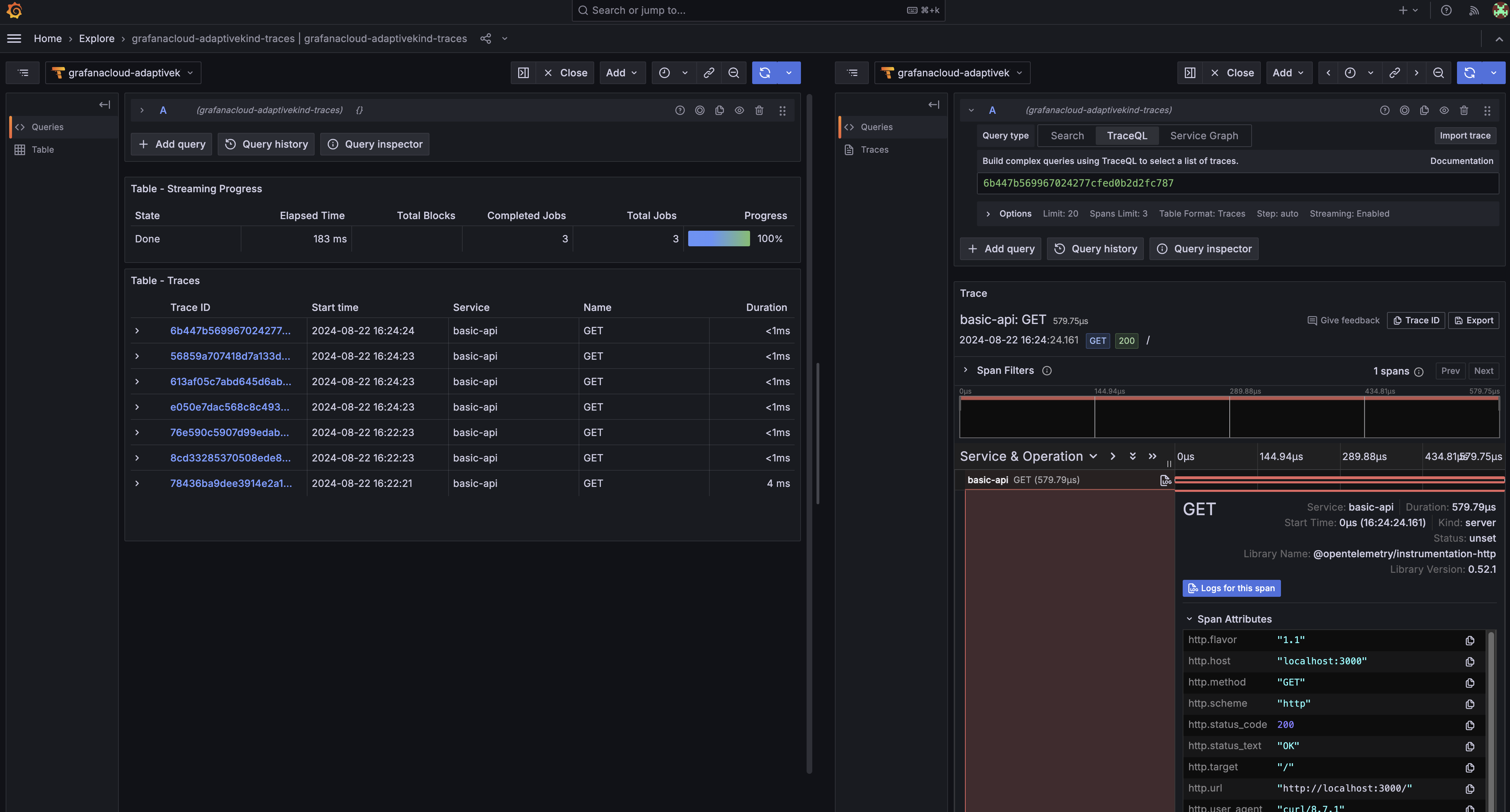

Back in the Grafana portal, launch the Grafana Cloud stack you set up earlier. Click through to Explore and select the Tempo data source. For my stack this is called grafanacloud-adaptivekind-traces. Click on Search and you should be able to see the traces that have been sent through from your local application.

Not much data yet, but we now have confidence that the telemetry is coming through.

Add metrics

If you've been following along and poking around the Grafana dashboard, you might have noticed that no metrics are showing up. The auto instrumentation does capture metrics for HTTP traffic, but those metrics aren't actually exported to the collector.

The auto instrumentation documentation states: "Currently, only Traces are supported for environment variable configuration. See the open issues for Metrics and Logs to learn more."

Looks like this support is coming soon, but for now we can export the metric data by setting up explicit instrumentation code instead.

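First, install the metrics exporter package, along with the SDK packages that the instrumentation code below requires directly:

npm install --save @opentelemetry/sdk-node @opentelemetry/sdk-metrics @opentelemetry/exporter-metrics-otlp-http

Then create a file called instrumentation.js with the following code, where we explicitly export the metrics periodically.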

// instrumentation.js
const { NodeSDK } = require("@opentelemetry/sdk-node");
const {
  getNodeAutoInstrumentations,
} = require("@opentelemetry/auto-instrumentations-node");
const { PeriodicExportingMetricReader } = require("@opentelemetry/sdk-metrics");
const {
  OTLPMetricExporter,
} = require("@opentelemetry/exporter-metrics-otlp-http");

const sdk = new NodeSDK({
  // Push collected metrics over OTLP/HTTP at a regular interval.
  metricReader: new PeriodicExportingMetricReader({
    exporter: new OTLPMetricExporter(),
  }),
  // Same auto instrumentation as before, now registered in code.
  instrumentations: [getNodeAutoInstrumentations()],
});

sdk.start();

Start up the API, requiring this instrumentation code instead of the auto-instrumentations-node register module from before.

OTEL_TRACES_EXPORTER="otlp" \
OTEL_SERVICE_NAME="basic-api" \
OTEL_NODE_RESOURCE_DETECTORS="env,host,os" \
node --require ./instrumentation.js index.js

Hit the API with curl http://localhost:3000 a few more times. In the Alloy UI we should now see metrics arriving every 60 seconds, so wait a short while for them to appear, e.g.

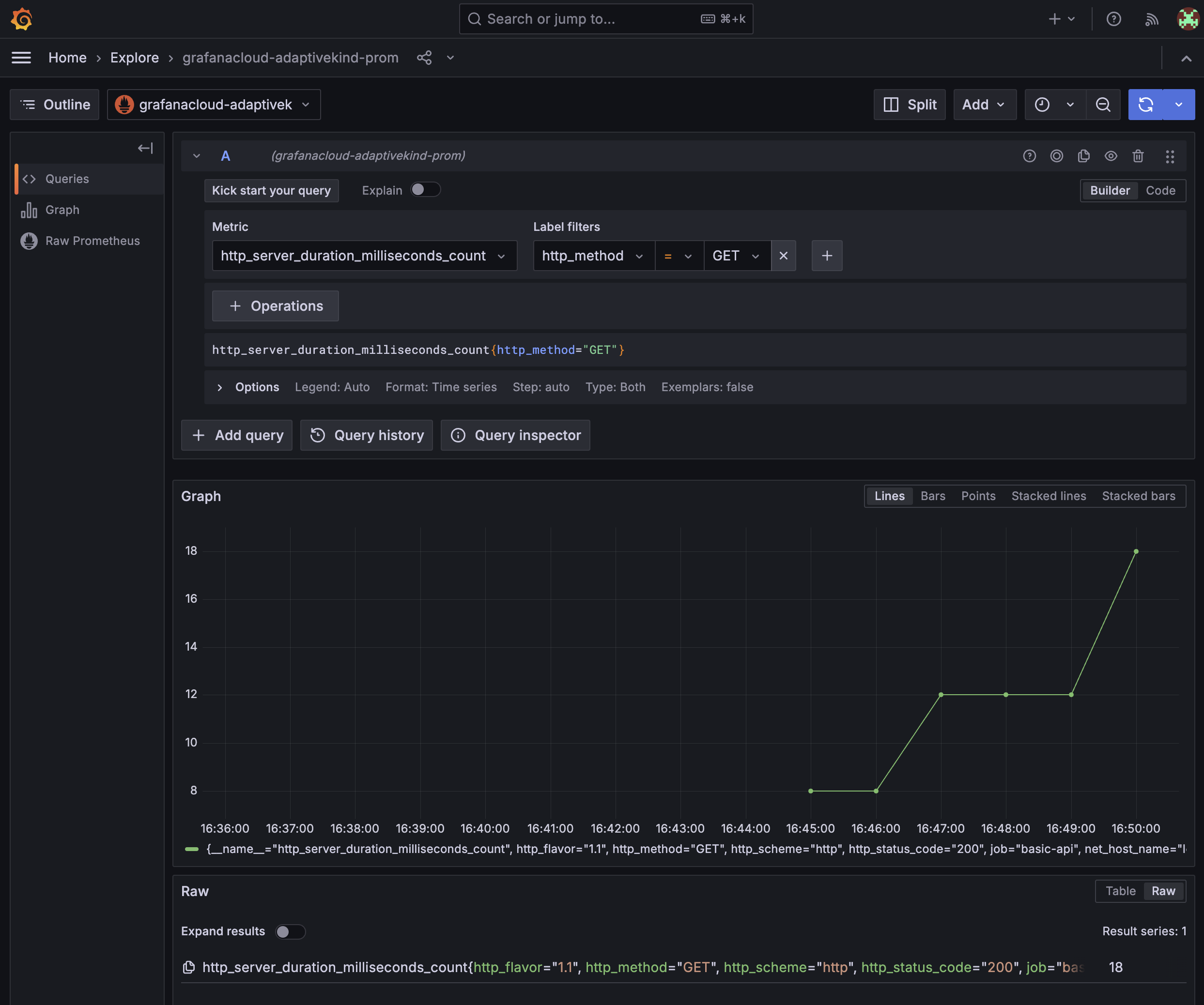
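The 60 second wait comes from the periodic reader: measurements accumulate in memory and are only handed to the exporter when the interval fires. The following is a toy model of that behaviour in plain JavaScript, not the @opentelemetry/sdk-metrics implementation. ToyPeriodicReader and the metric name are hypothetical, and I'm assuming cumulative totals and a 60000 ms default interval to mirror what we see above.

```javascript
// Toy model of a periodic metric reader (not the real SDK class).
class ToyPeriodicReader {
  constructor({ exporter, exportIntervalMillis = 60000 }) {
    this.exporter = exporter;
    this.exportIntervalMillis = exportIntervalMillis;
    this.counters = new Map();
    this.timer = null;
  }

  // Record a measurement in memory; nothing leaves the process yet.
  add(name, value = 1) {
    this.counters.set(name, (this.counters.get(name) ?? 0) + value);
  }

  // Hand a snapshot of the running (cumulative) totals to the exporter.
  collect() {
    this.exporter(Object.fromEntries(this.counters));
  }

  // Export on a timer, as a periodic reader would.
  start() {
    this.timer = setInterval(() => this.collect(), this.exportIntervalMillis);
    this.timer.unref(); // do not keep the process alive just for the timer
  }

  stop() {
    clearInterval(this.timer);
  }
}

const exported = [];
const reader = new ToyPeriodicReader({ exporter: (snapshot) => exported.push(snapshot) });

reader.add("http.requests", 3);
reader.collect(); // in real use the timer would trigger this
reader.add("http.requests", 2);
reader.collect();

console.log(exported); // [ { 'http.requests': 3 }, { 'http.requests': 5 } ]
```

The second export reports 5 rather than 2 because the toy keeps a running total, which is also how a request counter typically looks when you graph it later.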

Now visit your Grafana stack dashboard. Go to Explore again, but this time select the Prometheus data source. You should be able to see the metrics coming through, for example the count of GET requests.

Enable application metrics in Grafana

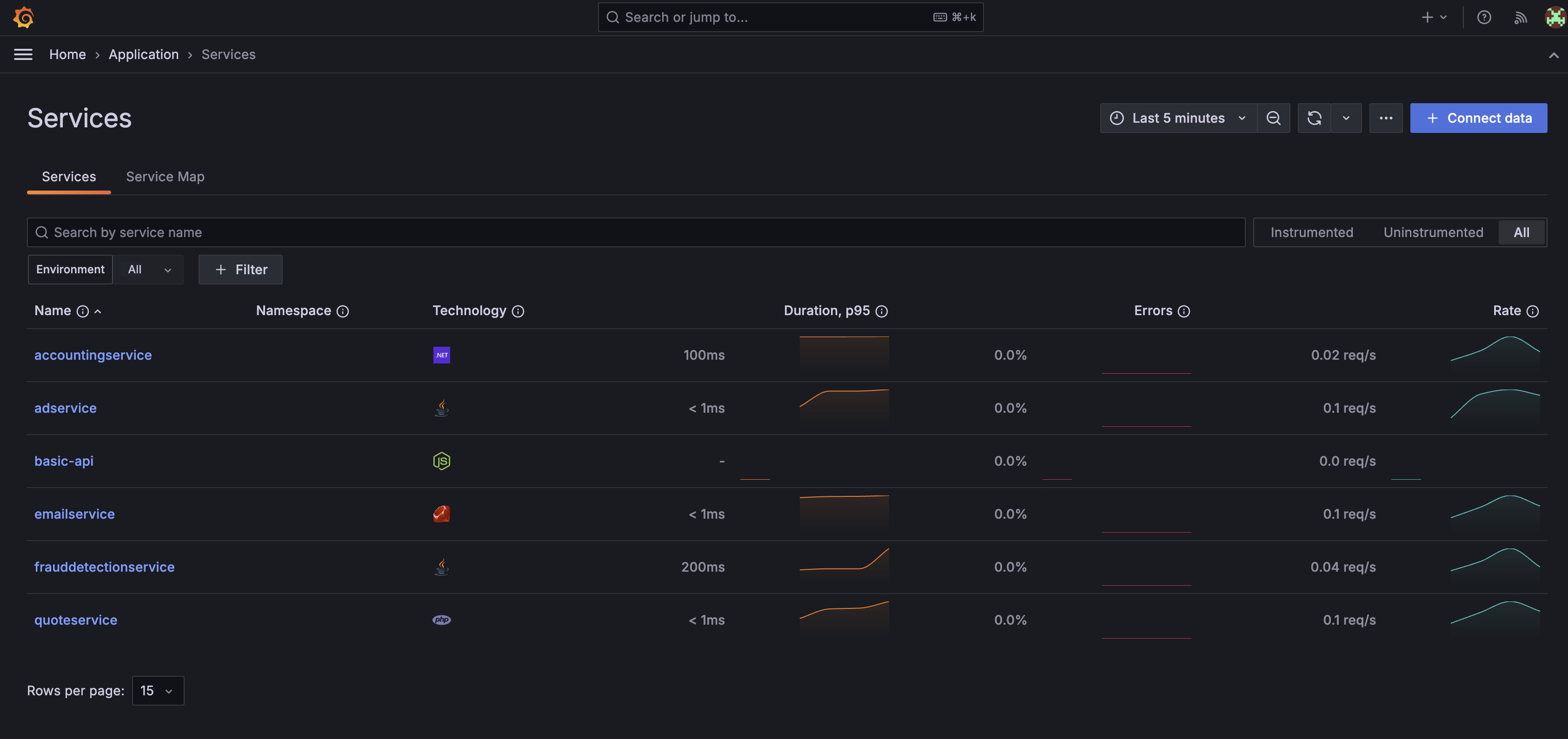

Click on Application in the Grafana dashboard and click the Enable metrics generation button. Agree to the Ts & Cs, although if you are on a free account then "There is no additional cost for Grafana Cloud Free accounts."

After 5 minutes or so the "basic-api" service should be visible and you should start to see an overview of the service metrics. Not much data yet, but let's move on to the next step to load more telemetry so we can see this Application services dashboard come to life.

Run OpenTelemetry demo application

The OpenTelemetry demo application is a typical web application that will populate some telemetry. We can start this up and wire it up to the Alloy OTel collector we already have running. Note that this stack does have a component that puts load on the system. This is great to capture telemetry, but once you are finished do remember to turn it off as it will be using substantial CPU.

We set OTEL_COLLECTOR_HOST to host.docker.internal to point the telemetry from the demo to the Alloy instance we have running on localhost. Let's spin up the demo with docker compose.

git clone https://github.com/open-telemetry/opentelemetry-demo.git

cd opentelemetry-demo/

OTEL_TRACES_EXPORTER="otlp" \
OTEL_SERVICE_NAME="otel-demo" \
OTEL_NODE_RESOURCE_DETECTORS="env,host,os" \
OTEL_COLLECTOR_HOST=host.docker.internal \
docker compose up --force-recreate --remove-orphans --detach

Once that has started up you will be able to access the demo at http://localhost:8080/. You can click around to generate some metrics, but the load generator component is doing this hard work for you as well.

You should be able to see the metrics, traces and logs now coming into the Grafana dashboard. After a few minutes the Application service dashboard should start to populate.

It is worth exploring the data collected here if you are new to Grafana. When you are done, stop the demo with:

docker compose down

Also stop the Alloy Docker container and the local basic API process if you still have it running.

Summary

Hope you've found this OpenTelemetry walkthrough useful and can see how this approach works for instrumenting your stack. I've just used Node.js here, but the same principles apply to other languages supported by OTel.

Decoupling telemetry collection from your service code is really powerful. It separates concerns cleanly and lets you swap in different observability and monitoring implementations for different parts of your stack or different environments. You can configure the pipeline to control where telemetry goes and how it's filtered, all without your application needing to care about any of it.

The Grafana Cloud free tier is pretty generous too - you get most of the features from paid accounts. Access is limited to just a few users, but the limits are more than enough for experimenting with Grafana.

OpenTelemetry tooling can be incredibly valuable for vendor-neutral telemetry capture in whatever system you're building. Give it a shot!